Screen Space Ambient Occlusion

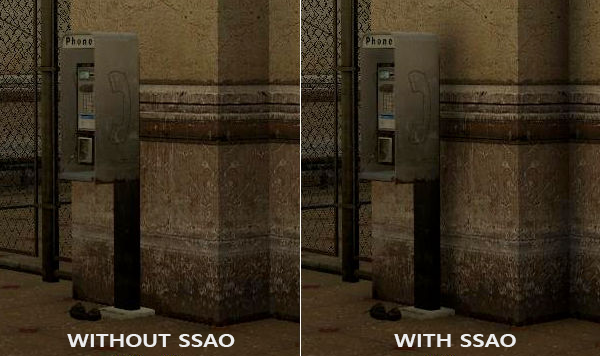

When lighting a scene in a way that resembles real life, you want to know how much light can reach a given point in space. This matters most for small corners and crevices that light has a hard time getting into. Ambient Occlusion algorithms approximate how much light can reach such points, and in this particular implementation I will be focusing on a screen space algorithm that works in view space.

The algorithm runs in a single fragment shader. For every fragment it samples a random kernel of points around the fragment and compares them against the stored depth values to calculate an occlusion factor. A later lighting pass uses this factor to lower the ambient component. I don't want to make this post too long, since the technique is quite old by now and there are already tons of resources on implementing it.
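To make the kernel idea concrete, here is a minimal CPU-side sketch in Python rather than the actual shader. It is not my renderer's code: `make_kernel`, `occlusion_factor`, `scene_depth`, `radius` and `bias` are all names and values made up for illustration, the depth lookup skips the projection to screen coordinates, and samples are simply flipped into the hemisphere of the normal instead of being rotated with a tangent basis. It assumes view space looks down -Z, so a larger z means closer to the camera.

```python
import math
import random


def make_kernel(count, seed=0):
    """Return `count` random offsets inside the unit sphere (hypothetical helper)."""
    rng = random.Random(seed)
    kernel = []
    for i in range(count):
        x, y, z = (rng.uniform(-1.0, 1.0) for _ in range(3))
        length = math.sqrt(x * x + y * y + z * z) or 1.0
        # Shrink the offsets so more samples cluster close to the fragment.
        t = i / count
        scale = rng.random() * (0.1 + 0.9 * t * t)
        kernel.append((x / length * scale, y / length * scale, z / length * scale))
    return kernel


def occlusion_factor(frag_pos, normal, kernel, scene_depth, radius=0.5, bias=0.025):
    """Return 1.0 for a fully open fragment and 0.0 for a fully occluded one.

    `scene_depth(x, y)` is an assumed stand-in for the depth texture lookup:
    it returns the view space z of the nearest surface at that x, y.
    """
    occluded = 0
    for sx, sy, sz in kernel:
        # Flip samples that point into the surface back into the normal's hemisphere.
        if sx * normal[0] + sy * normal[1] + sz * normal[2] < 0.0:
            sx, sy, sz = -sx, -sy, -sz
        sample_x = frag_pos[0] + sx * radius
        sample_y = frag_pos[1] + sy * radius
        sample_z = frag_pos[2] + sz * radius
        # The sample is occluded when stored geometry sits in front of it.
        if scene_depth(sample_x, sample_y) >= sample_z + bias:
            occluded += 1
    return 1.0 - occluded / len(kernel)
```

A flat surface facing the camera should come out fully open, which is a handy sanity check before moving the logic into a shader. Real implementations usually add a range check as well, so that geometry far behind or in front of the fragment does not contribute.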

Now for some things I personally encountered while implementing this technique. From what I read at first, the algorithm does not play nice with normals calculated from a normal map. It turned out not to matter in my case, since I store these normals in a separate texture as part of the G-buffer. This means the normals are read and stored per fragment, which still enables normal mapping and avoids SSAO noise.

Most implementations I found either had a per-fragment position texture calculated in view space or reconstructed the view space position from depth. My renderer follows the Unreal principle of calculating everything in world space for best performance, but this meant I had to convert the world space position to view space for every fragment in the SSAO shader, which could decrease performance.
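The conversion itself is just one matrix multiply per fragment. As a rough sketch in Python (the function names and the row-major nested-list matrix layout are my own choices for illustration, not anything from the renderer), positions are transformed with w = 1 and directions such as normals with w = 0, which assumes the view matrix contains only rotation and translation:

```python
def transform(matrix, vec4):
    """Multiply a row-major 4x4 matrix (nested lists) by a 4-component vector."""
    return tuple(sum(matrix[r][c] * vec4[c] for c in range(4)) for r in range(4))


def world_to_view_position(view, position):
    """Transform a world space position into view space (w = 1)."""
    x, y, z, _ = transform(view, (position[0], position[1], position[2], 1.0))
    return (x, y, z)


def world_to_view_direction(view, direction):
    """Transform a world space direction, e.g. a normal, into view space (w = 0).

    Assumes the view matrix has no non-uniform scale.
    """
    x, y, z, _ = transform(view, (direction[0], direction[1], direction[2], 0.0))
    return (x, y, z)


# Example: a camera at (0, 0, 5) looking down -Z without any rotation.
view = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, -5],
    [0, 0, 0, 1],
]
view_pos = world_to_view_position(view, (1, 2, 0))       # (1, 2, -5)
view_normal = world_to_view_direction(view, (0, 0, 1))   # unchanged by translation
```

In the shader this is the same multiplication with the camera's view matrix, done once for the position and once for the normal read from the G-buffer.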

Lastly, I did not really notice the effect at first and found that the occlusion factor actually brightened the entire scene. This happens when the factor is greater than 1.0, which increases the ambient component in my HDR lighting pass instead of lowering it, so don't forget to clamp!
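The fix is tiny. As a sketch in Python (the name `shade_ambient` and the tuple-based colour are placeholders for illustration; in a shader this would be a single clamp call), the factor is forced into the 0 to 1 range before it scales the ambient term:

```python
def shade_ambient(ambient, occlusion):
    """Scale an RGB ambient colour by the occlusion factor, clamped to [0, 1]."""
    occlusion = min(max(occlusion, 0.0), 1.0)
    return tuple(channel * occlusion for channel in ambient)
```

With the clamp in place, a factor above 1.0 leaves the ambient colour unchanged instead of brightening it.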
