For the past couple of months I've been working on a small project that lets users integrate ray traced shadows into an OpenGL C++ application through Vulkan. It generates a screen space shadow texture that's exported to OpenGL using external memory handles (Windows only for now, though I do believe that Vulkan has enums for Linux memory export).

Having implemented basic shadow mapping and CSM in other personal projects, I wanted to do this short write-up to share my thoughts and findings on old and new shadow techniques. We'll only be looking at single directional light techniques, so no local lights. Don't worry if you don't understand everything in this post. It's not a tutorial; it's meant to show the disadvantages of shadow mapping compared to ray tracing and to rant about my implementation.

Shadow Mapping

I think that a single shadow map's biggest benefit is ease of setup in a rasterized renderer:

Rendered depth from the light's point of view

You render the scene from the light's perspective with only depth writes enabled. Comparing these depth values to the camera's rendered depth gives you simple, sharp shadows. This introduces a handful of problems. The most obvious one is that sampling from the shadow depth texture is resolution dependent: stretch a single map over a large camera view and each texel covers a large chunk of the scene, resulting in very low resolution shadows.
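To put a rough number on that resolution dependence, here is a small C++ sketch. It assumes an orthographic light projection stretched over a square region of the scene; `texelWorldSize` and its parameters are hypothetical names for illustration, not something from Scatter or any library.

```cpp
#include <cassert>

// World-space width covered by a single shadow map texel, assuming an
// orthographic light projection fitted to a region `coveredWidth` units wide.
// Both the function and its parameters are illustrative assumptions.
double texelWorldSize(double coveredWidth, int shadowMapResolution) {
    assert(shadowMapResolution > 0);
    return coveredWidth / shadowMapResolution;
}
```

Under these assumptions, fitting a 2048x2048 map to a 200 unit wide view leaves every texel covering almost 0.1 units, which is far coarser than what the camera resolves up close.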

Cascaded Shadow Mapping

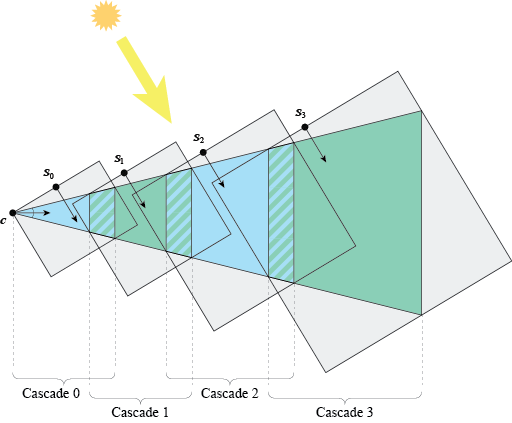

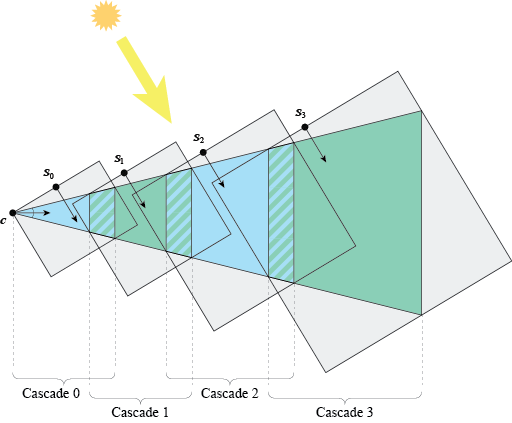

This is where Cascaded Shadow Mapping comes in. We split the camera's view frustum into multiple slices based on distance from the camera.

Camera frustum split up into 4 cascades

This way we get higher quality shadow maps up close. However, it requires rendering the scene once per cascade, eating up performance. To combat this I perform simple frustum culling for each cascade, discarding objects that are not in view for that particular cascade. This removes a lot of the overhead, especially for slices close to the camera.
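How the split distances are chosen isn't covered here, so the following is only one common option rather than what my renderer necessarily does: a blend between logarithmic and uniform splits, often called the practical split scheme. The function name `cascadeSplits` and the `lambda` blend weight are assumptions made for this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Far distance of each cascade, blending logarithmic and uniform splits.
// lambda = 1 gives purely logarithmic splits, lambda = 0 purely uniform ones.
std::vector<float> cascadeSplits(float nearPlane, float farPlane,
                                 int cascadeCount, float lambda) {
    assert(nearPlane > 0.0f && farPlane > nearPlane && cascadeCount > 0);
    std::vector<float> splits(cascadeCount);
    for (int i = 1; i <= cascadeCount; ++i) {
        float t = static_cast<float>(i) / static_cast<float>(cascadeCount);
        float logSplit = nearPlane * std::pow(farPlane / nearPlane, t);
        float uniformSplit = nearPlane + (farPlane - nearPlane) * t;
        splits[i - 1] = lambda * logSplit + (1.0f - lambda) * uniformSplit;
    }
    return splits;
}
```

A higher `lambda` packs more cascades close to the camera, which is where the extra shadow map resolution is most visible.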

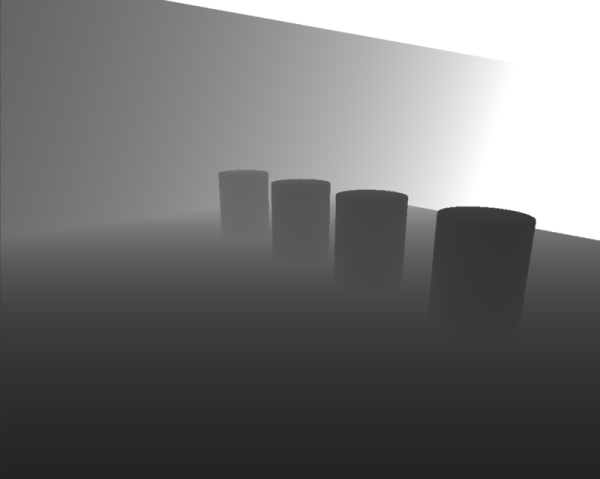

This effectively solves the resolution problem. CSM has problems of its own though, like seams and noticeable transitions between cascades. As for shadow mapping in general, when the angle between the light and a steep surface is small you'll get what's called "shadow acne":

Shadow acne

To prevent this from happening we have to apply a slight bias to the scene depth > shadow depth comparison. When this bias gets too big you get what's called peter panning: shadows look detached from their objects. A simple way to fix this is to enable front face culling while rendering the shadow map. So yeah, problem -> fix -> problem -> fix. Shadow map problems effectively boil down to rasterization limitations.
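The biased comparison can be sketched in a few lines of C++. The slope-scaled bias below is a widely used heuristic and an assumption on my part for this example, as are the names `slopeScaledBias` and `inShadow` and the bias values in the usage note.

```cpp
#include <algorithm>
#include <cassert>

// Bias that grows as the surface turns away from the light.
// nDotL is the cosine of the angle between surface normal and light vector.
float slopeScaledBias(float nDotL, float minBias, float maxBias) {
    assert(minBias <= maxBias);
    float c = std::min(std::max(nDotL, 0.0f), 1.0f); // clamp to [0, 1]
    return std::max(maxBias * (1.0f - c), minBias);
}

// The "scene depth > shadow depth" test with the bias applied.
bool inShadow(float sceneDepth, float shadowMapDepth, float bias) {
    return sceneDepth - bias > shadowMapDepth;
}
```

With a bias of zero, a surface whose stored depth is only slightly smaller than its own scene depth shadows itself, which is the acne. A bias that is too large makes `inShadow` return false for real occluders sitting close to the receiver, which is the peter panning.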

Ray Traced Shadows

With the launch of Nvidia's RTX cards we got hardware level support for ray tracing calculations in the form of RT cores, getting closer to making real-time ray tracing a reality. Since then AMD has also released a new line of cards with some form of hardware accelerated ray tracing, and graphics APIs like DX12 and Vulkan have both added RT functionality to the spec. However, it is still limited to a hybrid approach: we're still years away from fully path traced rendering in real-time and have to perform most algorithms at lower resolutions, with a single ray per pixel, temporally accumulated. These algorithms cover single parts of the final image like reflections, global illumination, ambient occlusion, and of course shadows.

My Implementation

My application/library called Scatter tries to be a plug and play integration of ray traced shadows into an OpenGL renderer. The actual program is quite small and simple: it calculates the scene's world position at a given pixel, traces a single ray in the opposite direction of a directional light and checks for intersections. If none is found along the ray, the pixel is considered lit. Scatter only has access to depth at a given pixel, so it needs the inverse camera matrices to reconstruct the world position:

    vec3 reconstructPosition(in vec2 uv, in float depth, in mat4 InvVP) {
        float x = uv.x * 2.0f - 1.0f;
        float y = uv.y * 2.0f - 1.0f; // uv.y * -1 for d3d
        float z = depth * 2.0f - 1.0f;
        vec4 position_s = vec4(x, y, z, 1.0f);
        vec4 position_v = InvVP * position_s;
        vec3 div = position_v.xyz / position_v.w;
        return div;
    }

This takes the depth value at the current pixel and creates a screen position out of it using the pixel's relative location on the screen (uv), with everything remapped to the range of -1 to 1. Multiplying the screen position by the inverse camera matrices and dividing by w converts it back from screen space to world space.
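A quick way to convince yourself the reconstruction works is a CPU round trip: project a known point, then reconstruct it from uv and depth. The C++ sketch below does that under simplifying assumptions: the view matrix is identity (so world space equals view space), the projection is a standard OpenGL-style perspective matrix, and its inverse is written out analytically. `Mat4`, `mul`, `perspective` and `inversePerspective` are helper names made up for this example.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<std::array<float, 4>, 4>; // row-major: m[row][col]

Vec4 mul(const Mat4& m, const Vec4& v) {
    return {
        m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z + m[0][3] * v.w,
        m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z + m[1][3] * v.w,
        m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z + m[2][3] * v.w,
        m[3][0] * v.x + m[3][1] * v.y + m[3][2] * v.z + m[3][3] * v.w};
}

// OpenGL-style perspective projection, NDC depth in [-1, 1].
Mat4 perspective(float fovY, float aspect, float zNear, float zFar) {
    float f = 1.0f / std::tan(fovY * 0.5f);
    Mat4 m{}; // all zeros
    m[0][0] = f / aspect;
    m[1][1] = f;
    m[2][2] = (zFar + zNear) / (zNear - zFar);
    m[2][3] = 2.0f * zFar * zNear / (zNear - zFar);
    m[3][2] = -1.0f;
    return m;
}

// Analytic inverse, valid only for matrices built by perspective() above.
Mat4 inversePerspective(const Mat4& p) {
    Mat4 m{};
    m[0][0] = 1.0f / p[0][0];
    m[1][1] = 1.0f / p[1][1];
    m[2][3] = -1.0f;
    m[3][2] = 1.0f / p[2][3];
    m[3][3] = p[2][2] / p[2][3];
    return m;
}

// CPU port of the GLSL function: uv and depth in [0, 1] back to a position.
Vec3 reconstructPosition(float u, float v, float depth, const Mat4& invVP) {
    Vec4 ndc{u * 2.0f - 1.0f, v * 2.0f - 1.0f, depth * 2.0f - 1.0f, 1.0f};
    Vec4 p = mul(invVP, ndc);
    return {p.x / p.w, p.y / p.w, p.z / p.w};
}
```

Projecting a point with `perspective`, dividing by w and remapping to [0, 1] gives the uv and depth a fragment shader would see; feeding those into `reconstructPosition` with the inverse matrix should return the original point up to floating point error.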

The Vulkan application has a ray generation shader and a miss shader. In the generation shader we reconstruct the position, set the ray to start at that position, and set the direction to be the opposite of the light direction. We assume everything is in shadow by setting a boolean value to true. This boolean is passed along with the ray, so when no intersections happen it reaches the miss shader and we set it to false. Based on the bool's value we write either white or black to a full screen shadow texture. This results in pixel perfect hard shadows, but somehow we still get acne?
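The "assume shadowed, let the miss shader clear it" pattern is easy to mimic on the CPU. The C++ sketch below is not Scatter's shader code: it swaps the acceleration structure for a hypothetical list of spheres and the miss shader for a plain `if`, just to show the control flow.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Sphere { Vec3 center; float radius; };

// True if a ray with normalized direction hits the sphere in front of its origin.
bool rayHitsSphere(Vec3 origin, Vec3 dir, const Sphere& s) {
    Vec3 oc = sub(origin, s.center);
    float b = dot(oc, dir);
    float c = dot(oc, oc) - s.radius * s.radius;
    float disc = b * b - c;
    if (disc < 0.0f) return false;
    return (-b + std::sqrt(disc)) > 0.0f; // far root lies ahead of the origin
}

// Returns 0 (black) for shadowed pixels and 1 (white) for lit ones.
float shadowTerm(Vec3 worldPos, Vec3 lightDir, const std::vector<Sphere>& scene) {
    assert(std::fabs(dot(lightDir, lightDir) - 1.0f) < 1e-3f); // unit vector expected
    bool shadowed = true; // payload default: assume everything is in shadow
    Vec3 toLight = {-lightDir.x, -lightDir.y, -lightDir.z};
    bool hit = false;
    for (const Sphere& s : scene) {
        if (rayHitsSphere(worldPos, toLight, s)) { hit = true; break; }
    }
    if (!hit) shadowed = false; // this is the job of the miss shader
    return shadowed ? 0.0f : 1.0f;
}
```

Since only the yes/no answer matters, the loop stops at the first hit instead of searching for the closest one.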

Scatter integrated into ScatterGL, a host application written by my project partner.

Because of floating point inaccuracy in the lower decimals, our positions are slightly off at every pixel, making the ray intersect the very surface it starts on. To fix this I move the starting position a tiny bit along its normal. Scatter should work in screen space in any renderer as long as you provide it the scene's depth texture, so we need to approximate the normal by reading positions from neighbouring pixels. We then take the cross product of the directions from the current position to the sampled positions. Done naively, this causes artifacts at the edges of objects because a neighbouring pixel might belong to a completely different object/depth/position. Because we have access to the depth texture, we sample a neighbour horizontally and vertically next to the current pixel, rejecting the sample on either axis based on its difference in depth.
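Here is one way the neighbour-based normal could look, as a C++ sketch. The selection rule is my simplification for this example: on each axis it keeps whichever neighbour is closer in depth to the centre pixel. The `Sample` struct and the function names are hypothetical, and the sign of the resulting normal depends on your screen and handedness conventions.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Reconstructed world position plus the raw depth it came from.
struct Sample { Vec3 position; float depth; };

// Per axis, use the neighbour whose depth is closest to the centre pixel,
// so a neighbour lying on a different object is skipped.
Vec3 approximateNormal(const Sample& c, const Sample& left, const Sample& right,
                       const Sample& down, const Sample& up) {
    bool useRight = std::fabs(right.depth - c.depth) < std::fabs(left.depth - c.depth);
    bool useUp = std::fabs(up.depth - c.depth) < std::fabs(down.depth - c.depth);
    Vec3 dx = useRight ? sub(right.position, c.position) : sub(c.position, left.position);
    Vec3 dy = useUp ? sub(up.position, c.position) : sub(c.position, down.position);
    return normalize(cross(dx, dy));
}

// Push the ray origin off the surface to avoid self-intersection.
Vec3 offsetRayOrigin(Vec3 position, Vec3 normal, float epsilon) {
    return {position.x + normal.x * epsilon,
            position.y + normal.y * epsilon,
            position.z + normal.z * epsilon};
}
```

The size of `epsilon` is a trade-off: too small and the acne comes back, too large and contact shadows start to detach, much like the shadow map bias.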

Scatter integrated in Raekor

Soft Shadows

My implementation produces very sharp hard shadows, with screen resolution being the only factor that affects their quality. But what about soft shadows? Shadows in real life have a soft drop-off called the penumbra. Approximating a shadow's penumbra using shadow mapping is a whole separate (headache inducing) topic that I won't be covering. I recommend looking into Nvidia's PCSS algorithm.

With ray tracing it's relatively simple in theory. A directional light usually represents an object like the sun, so tracing a single ray doesn't make sense physically. The sun is a giant sphere that emits light in every direction. To approximate this we need to trace a fixed number of rays over the hemisphere facing our virtual world. To simplify this algorithm, instead of a hemisphere we treat the sun as a disk, and calculate the ray's direction as

    vec3 direction = (sun.center + randomOnDisk()) - surface.position;

As this is a form of Monte Carlo integration, it's important that the added offset is random per pixel, per frame. I oversimplified a lot here, and disk sampling does take away some handy parameters for artists. See Ray Tracing Gems (Nvidia, 2019) for better solutions, like taking in the cone angle to configure the width of the penumbra.
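To make the disk idea concrete, here is a C++ sketch of the direction calculation. It assumes the disk is oriented to face the surface point and is sampled uniformly; `sampleDisk`, `sunRayDirection` and the `sunRadius` parameter are names invented for this example, and `u1`, `u2` stand for two uniform random numbers in [0, 1) that you'd generate per pixel, per frame.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Uniform point on a disk; the sqrt keeps samples from clumping at the centre.
Vec2 sampleDisk(float u1, float u2, float radius) {
    const float kTwoPi = 6.28318530718f;
    float r = radius * std::sqrt(u1);
    float theta = kTwoPi * u2;
    return {r * std::cos(theta), r * std::sin(theta)};
}

// Direction from the surface towards a random point on the sun disk.
Vec3 sunRayDirection(Vec3 surfacePos, Vec3 sunCenter, float sunRadius,
                     float u1, float u2) {
    Vec3 toSun = normalize(sub(sunCenter, surfacePos));
    // Build two axes perpendicular to toSun to span the disk.
    Vec3 helper = std::fabs(toSun.y) < 0.99f ? Vec3{0.0f, 1.0f, 0.0f}
                                             : Vec3{1.0f, 0.0f, 0.0f};
    Vec3 tangent = normalize(cross(helper, toSun));
    Vec3 bitangent = cross(toSun, tangent);
    Vec2 d = sampleDisk(u1, u2, sunRadius);
    Vec3 target = {sunCenter.x + tangent.x * d.x + bitangent.x * d.y,
                   sunCenter.y + tangent.y * d.x + bitangent.y * d.y,
                   sunCenter.z + tangent.z * d.x + bitangent.z * d.y};
    return normalize(sub(target, surfacePos));
}
```

The ratio of `sunRadius` to the sun's distance sets how wide the penumbra gets, which is the same knob the cone angle approach exposes more directly.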

As I mentioned previously, tracing multiple rays per pixel is expensive and generally avoided, and soft shadows are no different. This is where Scatter hits its limitations. Modern solutions sample a single random sun ray per pixel every frame and accumulate the results over multiple frames. This is done through temporal reprojection, which requires last frame's camera matrices and a full screen texture storing motion vectors. Using the matrices and motion vectors we can determine where the current pixel was last frame and accumulate results. Because Scatter targets older applications that still use OpenGL, we can't assume the renderer produces these resources; requiring them would greatly limit who can use the library.
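Without motion vectors, the simplest form of accumulation is a running average per pixel that only holds while the camera and scene stand still. The C++ sketch below shows that idea for a single pixel; `ShadowAccumulator` is a hypothetical name, and the need to call `reset` whenever anything moves is an assumption that follows from skipping reprojection.

```cpp
#include <cassert>
#include <cmath>

// Running average of per-frame shadow samples (0 = shadowed, 1 = lit) for one pixel.
struct ShadowAccumulator {
    float mean = 0.0f;
    int count = 0;

    // Fold a new sample into the average and return the updated value.
    float add(float sample) {
        ++count;
        mean += (sample - mean) / static_cast<float>(count);
        return mean;
    }

    // Without reprojection the history is invalid as soon as anything moves.
    void reset() {
        mean = 0.0f;
        count = 0;
    }
};
```

A pixel that sees the sun in half of its random samples converges to 0.5, giving the grey of a penumbra. Temporal reprojection exists precisely to avoid throwing that history away on every camera move.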

Final Thoughts

The current implementation works surprisingly well, though there might be some bugs because of GPU synchronization between OpenGL and Vulkan. I think a separate library just for acceleration structures could be a major contribution to the future of ray tracing in Vulkan, though one size never fits all in graphics. In the future I'd like to implement ray traced soft shadows, albeit with multiple rays per pixel and accumulated over frames using a simple moving average. If I ever get around to implementing motion vectors in Raekor (my engine project) I'll explore a more modern implementation. For now, the future is here, but not really!
